VIABAL

Virtual, Immersive, Augmented & Binaural Audio Lab

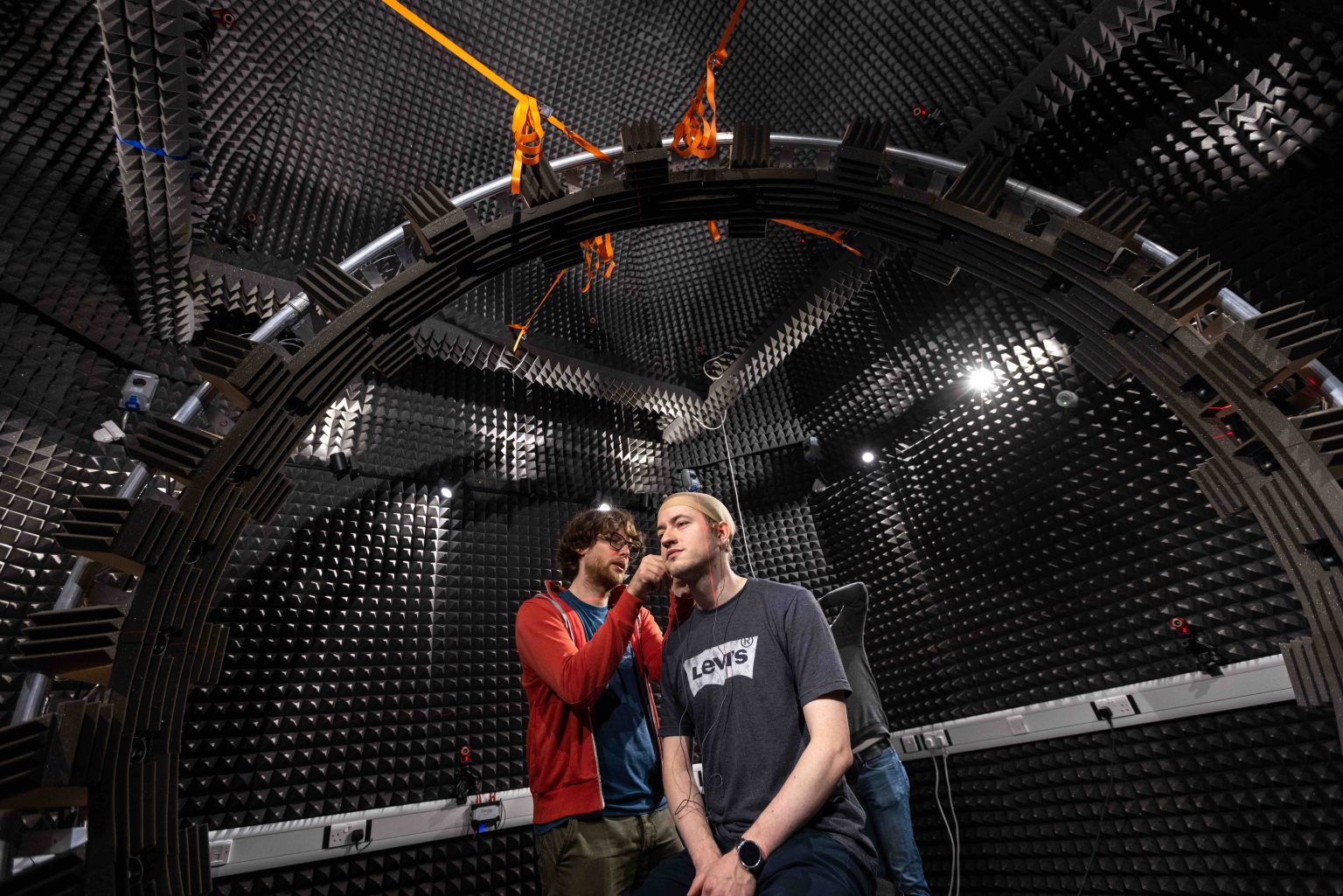

VIABAL is part of the Centre for Digital Music at Queen Mary University of London. We’re a group of people united by an interest in spatial audio in all its guises. We perform research at the intersection of signal processing, machine learning, acoustics and perception.

news

| | |
|---|---|
| Aug 19, 2025 | We are recruiting a fully funded UK PhD student at Queen Mary University of London (Centre for Digital Music at QMUL, Queen Mary School of Electronic Engineering and Computer Science) to develop AI-driven “superhuman” HRTFs that improve localisation and speech-in-noise for people with hearing loss. Full details & how to apply: https://www.findaphd.com/phds/project/?p186493 (Application Deadline: 30 Nov 2025) |
| Feb 14, 2025 | Announcing the Launch of VIABAL and Our New Website! Stay tuned for exciting developments. |

selected publications

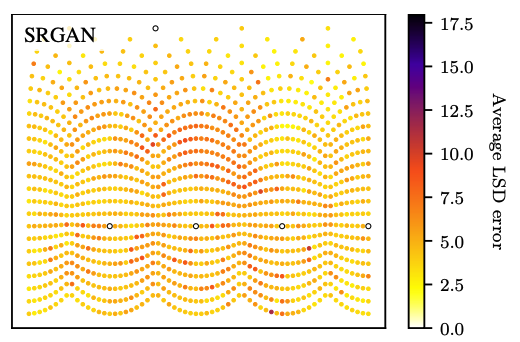

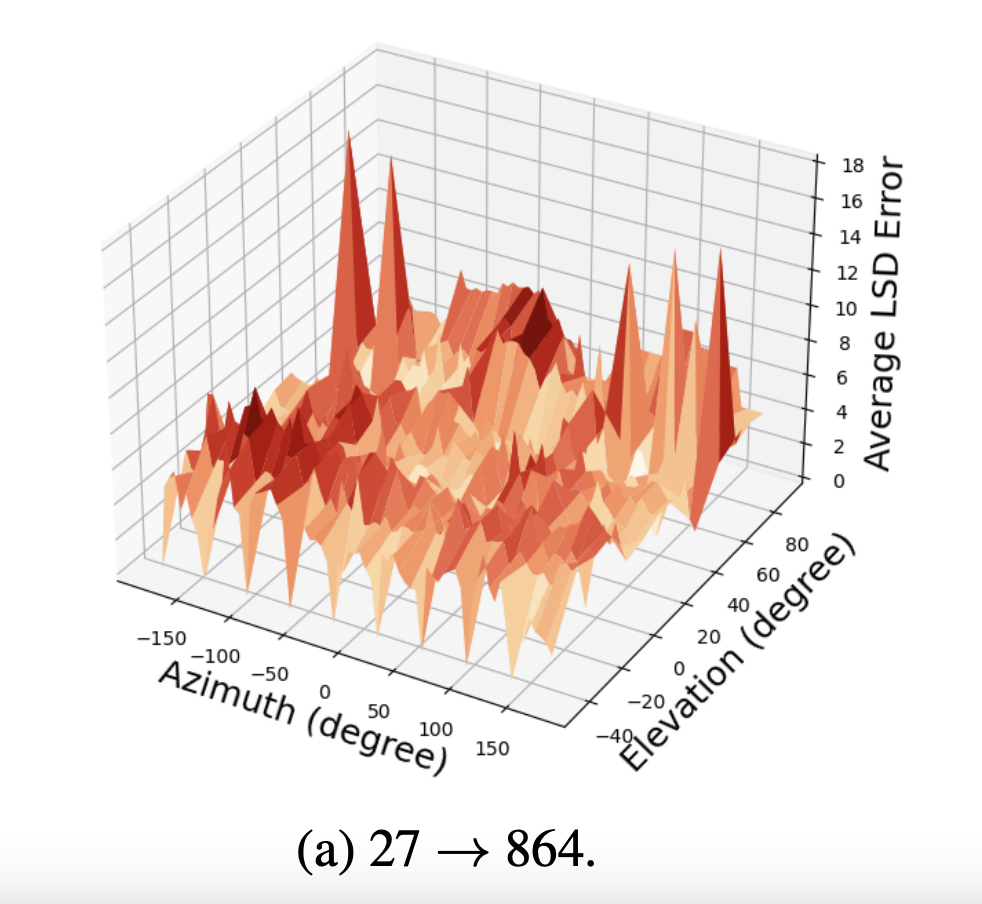

- Head-Related Transfer Function Upsampling Using an Autoencoder-Based Generative Adversarial Network with Evaluation Framework. Journal of the Audio Engineering Society, 2025

- A Machine Learning Approach for Denoising and Upsampling HRTFs. In European Signal Processing Conference (EUSIPCO), 2025

- Listener Acoustic Personalisation Challenge - LAP24: Head-Related Transfer Function Upsampling. IEEE Open Journal of Signal Processing, 2025

- HRTF Upsampling With a Generative Adversarial Network Using a Gnomonic Equiangular Projection. IEEE/ACM Transactions on Audio, Speech, and Language Processing, 2024

- HRTF Spatial Upsampling in the Spherical Harmonics Domain Employing a Generative Adversarial Network. In International Conference on Digital Audio Effects (DAFx), Sep 2024

- On The Relevance Of The Differences Between HRTF Measurement Setups For Machine Learning. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Sep 2023